
Tech giants use Multimodal RAG every day in production!

- Spotify uses it to answer music queries

- YouTube uses it to turn prompts into tracks

- Amazon Music uses it to create playlists from prompts

Let's learn how to build a Multimodal Agentic RAG (with code):

Today, we'll build a multimodal Agentic RAG system that answers the user's spoken queries over documents and audio files.

Tech stack:

- @AssemblyAI for transcription.

- @milvusio as the vector DB.

- @beam_cloud for deployment.

- @crewAIInc Flows for orchestration.

Let's build it!

Here's the workflow:

- User inputs data (audio + docs).

- AssemblyAI transcribes the audio files.

- Transcribed text & docs are embedded in the Milvus vector DB.

- Research Agent retrieves info relevant to the user's query.

- Response Agent uses it to craft a response.

Check this👇
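Here is a minimal sketch of how these stages chain together. It assumes each stage is passed in as a plain Python function. The names `answer_query`, `transcribe`, `index`, `retrieve`, and `respond` are hypothetical and are not CrewAI or AssemblyAI APIs. In the actual app, CrewAI Flows handle this orchestration.

```python
from typing import Callable, Dict, List

def answer_query(
    audio_paths: List[str],
    docs: Dict[str, str],                      # source name -> document text
    query_audio: str,
    transcribe: Callable[[str], str],          # speech-to-text (AssemblyAI in the thread)
    index: Callable[[Dict[str, str]], None],   # embed + store (Milvus in the thread)
    retrieve: Callable[[str], List[str]],      # query text -> relevant chunks
    respond: Callable[[str, List[str]], str],  # agents craft the final answer
) -> str:
    # Ingestion: transcribe every audio file, then index transcripts alongside docs
    corpus = dict(docs)
    for path in audio_paths:
        corpus[path] = transcribe(path)
    index(corpus)

    # Inference: transcribe the spoken query, fetch context, craft a response
    query = transcribe(query_audio)
    context = retrieve(query)
    return respond(query, context)
```

Because each stage is injectable, you can test the whole flow with fakes before wiring in the real services.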

1️⃣ Data Ingestion

To begin, the user places the text and audio input files in a data directory.

A CrewAI Flow implements the logic to discover these files and prepare them for further processing.

Check this👇

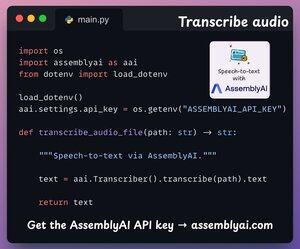
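The discovery step can be sketched in plain Python. This is not the CrewAI Flow code itself. It assumes audio and text files can be told apart by extension, and the extension sets and the `discover_files` name are hypothetical.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical extension sets; adjust to the formats your data actually uses
AUDIO_EXTS = {".mp3", ".wav", ".m4a", ".flac"}
TEXT_EXTS = {".txt", ".md"}

def discover_files(data_dir: str) -> Dict[str, List[Path]]:
    """Walk data_dir recursively and bucket files into audio vs. text by extension."""
    found: Dict[str, List[Path]] = {"audio": [], "text": []}
    for path in sorted(Path(data_dir).rglob("*")):
        if not path.is_file():
            continue  # skip sub-directories themselves
        ext = path.suffix.lower()
        if ext in AUDIO_EXTS:
            found["audio"].append(path)
        elif ext in TEXT_EXTS:
            found["text"].append(path)
        # anything else (images, binaries, ...) is ignored
    return found
```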

2️⃣ Transcribe audio

Next, we transcribe the user's audio input using AssemblyAI's Speech-to-text platform.

AssemblyAI is not open source, but it offers ample free credits for its state-of-the-art (SOTA) transcription models, more than enough for this demo.

Check this👇

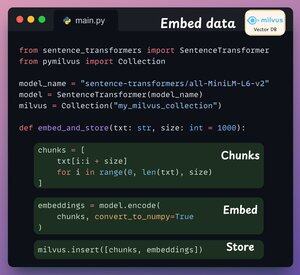
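Here is a minimal sketch of batch transcription with the AssemblyAI Python SDK (`pip install assemblyai`). It assumes `aai.settings.api_key` has already been set. The helper names `assemblyai_transcribe` and `transcribe_all` are hypothetical. The transcriber is passed in as a function so the loop can run without the SDK or network access.

```python
from typing import Callable, Dict, Iterable

def assemblyai_transcribe(path: str) -> str:
    """Transcribe one local audio file (or URL) with AssemblyAI."""
    import assemblyai as aai  # lazy import so the rest of the sketch runs without it
    # Assumes aai.settings.api_key = "..." was set beforehand
    transcript = aai.Transcriber().transcribe(path)
    if transcript.status == aai.TranscriptStatus.error:
        raise RuntimeError(f"Transcription failed for {path}: {transcript.error}")
    return transcript.text or ""

def transcribe_all(
    paths: Iterable[str],
    transcribe: Callable[[str], str] = assemblyai_transcribe,
) -> Dict[str, str]:
    """Map each audio path to its transcript, dropping empty transcripts."""
    results: Dict[str, str] = {}
    for path in paths:
        text = transcribe(path).strip()
        if text:
            results[path] = text
    return results
```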

3️⃣ Embed input data

Next, the transcripts from the previous step and the input text documents are embedded and stored in the Milvus vector DB.

Here's how we do it 👇
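Before embedding, text is usually split into chunks. Below is a minimal sketch assuming fixed-size character chunks with overlap. The chunk size, overlap, `embed` function, and row layout are all hypothetical choices. The rows use `id`/`vector` keys plus extra fields, a shape that could be passed to pymilvus's `MilvusClient.insert(collection_name=..., data=records)` on a quick-setup collection.

```python
from typing import Callable, Dict, List

def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # last window already reaches the end of the text
    return chunks

def build_records(corpus: Dict[str, str], embed: Callable[[str], List[float]]) -> List[dict]:
    """Turn {source: text} into rows for a vector DB insert, keeping the source for citations."""
    records: List[dict] = []
    for source, text in corpus.items():
        for chunk in chunk_text(text):
            records.append({"id": len(records), "vector": embed(chunk), "text": chunk, "source": source})
    return records
```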

4️⃣ User query

Ingestion is complete.

Now we move to the inference phase!

Next, the user inputs a voice query, which is transcribed by AssemblyAI.

Check this👇

5️⃣ Retrieve context

Next, we generate an embedding for the query and pull the most relevant chunks from the Milvus vector DB.

This is how we do it 👇
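Milvus performs this search server-side, typically with an approximate index; with pymilvus it would look like `client.search(collection_name=..., data=[query_vec], limit=k, output_fields=["text"])`. To show what the search computes, here is a brute-force cosine-similarity top-k sketch in plain Python. The `top_k` name and record layout are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec: Sequence[float], records: List[dict], k: int = 3) -> List[Tuple[float, dict]]:
    """Score every record against the query and keep the k most similar."""
    scored = [(cosine(query_vec, r["vector"]), r) for r in records]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

A brute-force scan is fine for a demo-sized corpus. A vector DB becomes worthwhile once scanning every vector per query gets too slow.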

6️⃣ Generate an answer

Once we have the relevant context, our Crew is invoked to generate a clear response with citations for the user.

Check this 👇

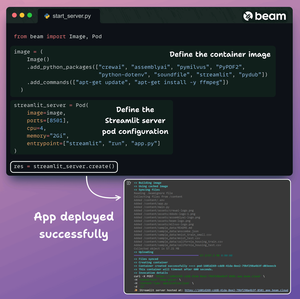
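Citations work best when each retrieved chunk carries a label the model can point to. Below is a minimal sketch of one common way to format that context. The prompt wording, the `build_answer_prompt` name, and the `source`/`text` keys are hypothetical, not the thread's actual CrewAI agent prompts.

```python
from typing import List

def build_answer_prompt(question: str, chunks: List[dict]) -> str:
    """Number each retrieved chunk so the model can cite it as [1], [2], ..."""
    lines = [
        "Answer the question using only the context below.",
        "Cite sources with their bracketed numbers.",
        "",
    ]
    for i, chunk in enumerate(chunks, start=1):
        # Keep the source name next to the text so citations map back to files
        lines.append(f"[{i}] ({chunk['source']}) {chunk['text']}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)
```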

Finally, we wrap everything up in a clean Streamlit interface and deploy the app to a serverless container using Beam.

We import the necessary Python dependencies and specify the compute specifications for the container.

And then we deploy the app in a few lines of code👇

Once deployed, we get a private deployment of the Multimodal Agentic RAG workflow we just built.

Check this demo 👇

Here's the workflow we implemented:

- User provided data (audio + docs)

- AssemblyAI transcribed the audio files

- Transcribed text & docs were embedded in the Milvus vector DB

- Research Agent retrieved info relevant to the user's query

- Response Agent used it to craft a response

Check this👇

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar ✔️ for more insights and tutorials on LLMs, AI Agents, and Machine Learning!

Aug 5, 20:30
